This article explains how the process of measuring the performance of an OSB Proxy Service and presenting the results in a “performance analysis document” was partly automated. After a SoapUI Test Step had sent a request to the service, the service performance metrics were extracted by using the ServiceDomainMBean in the public API of the Oracle Service Bus. These are the same metrics that can be seen in the Oracle Service Bus Console via the Service Monitoring Details. The article also explains how a PowerPoint VBA module injects these metric values into a slide with placeholders and exports the slide as an image. This image is used to present the measurements in the “performance analysis document”.

Performance issues

In a web application we had performance issues on a page that showed data loaded via a web service (deployed on Oracle Service Bus 11gR1). On this page, an application user can fill in some search criteria; when the search button is pressed, data is retrieved from a database via the MyProxyService and shown on the page in table format.

![Web application Web application]()

Performance analysis document

Based on knowledge about the data, the business owner of the application put together a number of test cases to be used for the performance measurements, in order to determine whether the performance requirements were met. In all there were 9 different test cases. Some of these test cases retrieved data for a period of 2 weeks, for example, and others for a period of 2 months.

Because it was not certain what caused the lack of performance, the back-end OSB Proxy Service was to be investigated in addition to the front-end, and the performance measurement results were to be documented in the “performance analysis document”. It was known from the start that once the problem was pinpointed and a solution was chosen and put in place, the performance measurements would have to be carried out again and the results documented again.

The “performance analysis document” is the central document used by the business owner of the application and a team of specialists as the basis for choosing solutions for the lack of performance in the web page. It contains an overview of all the measurements that were done (front-end and back-end), the software used, details about the services in question, the performance requirements, an overview of the test cases that were used, a summary, etc.

Because a picture is worth a thousand words, the OSB Proxy Service was represented in the “performance analysis document” as shown below (the real names are left out). Such a picture was used for each of the 9 test cases.

![Picture used in the performance analysis document Picture used in the performance analysis document]()

The OSB Proxy Service (for this article renamed to MyProxyService) contains a Request Response Pipeline with several Stages, Pipeline Pairs, a Route and several Service Callouts. For each component a response time is presented.

Service Monitoring Details

In the Oracle Service Bus Console, Pipeline Monitoring was enabled (at Action level or above) via the Operational Settings | Monitoring of the MyProxyService.

![Enabled Pipeline Monitoring Enabled Pipeline Monitoring]()

Before a test case was started, the Statistics of the MyProxyService were reset (by hand) in the Oracle Service Bus Console.

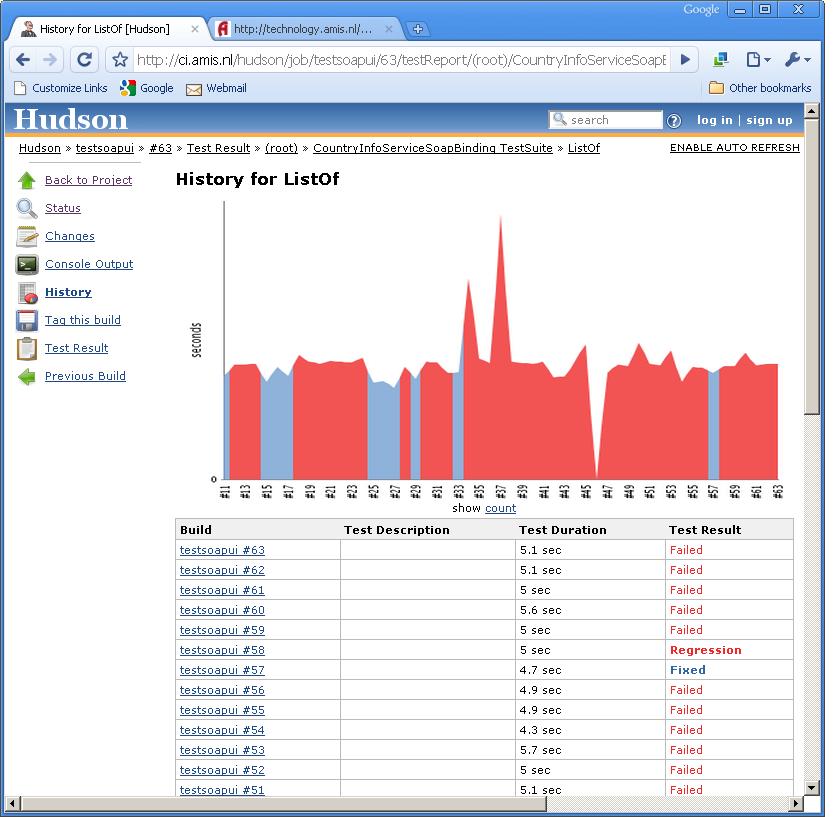

All 9 test cases (requests with different search criteria) were set up in SoapUI, in order to make it easy to repeat them. To get average performance measurements, a total of 5 calls (requests) was executed per test case. For the MyProxyService, the results of these 5 calls were investigated in the Oracle Service Bus Console via the Service Monitoring Details.

![Service Monitoring Details Service Monitoring Details]()

In the example shown above, based on the message count of 5, the overall average response time is 820 msecs. The Service Metrics tab displays the metrics for a proxy service or a business service. The Pipeline Metrics tab (only available for proxy services) gives information on various components of the pipeline of the service. The Action Metrics tab (only available for proxy services) presents information on actions in the pipeline of the service, displayed as a hierarchy of nodes and actions.
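The relation between the message count and the overall average can be illustrated with a small sketch. The five individual response times below are hypothetical: only the minimum (552 msecs), the maximum (1530 msecs) and the average (820 msecs) appear in the statistics file listed further on. The class and method names are made up for illustration, and integer division is an assumption about how the average is rounded.

```java
final class AverageResponseTime {

    /** Returns the integer average (in msecs) of the given response times. */
    static long averageMillis(long[] responseTimesMillis) {
        if (responseTimesMillis.length == 0) {
            throw new IllegalArgumentException("at least one response time is required");
        }
        long sum = 0;
        for (long t : responseTimesMillis) {
            sum += t;
        }
        return sum / responseTimesMillis.length;
    }

    public static void main(String[] args) {
        // Hypothetical response times (msecs) of the 5 calls for one test case.
        long[] calls = {552, 600, 700, 718, 1530};
        System.out.println("Message count: " + calls.length);
        System.out.println("Overall avg. response time: " + averageMillis(calls) + " msecs");
    }
}
```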

At first, the Service Monitoring Details (of the Oracle Service Bus Console) for a particular test case were copied by hand into a PowerPoint slide, from which a picture was created that was then copied into the “performance analysis document” at the paragraph for that test case.

Because of the number of measurements that had to be made for the “before situation” and the “after situation” (when the solution was put in place), it was decided to partly automate this process. With future updates of the MyProxyService code in mind, it was also anticipated that the performance measurements for the 9 test cases would have to be carried out again after each update.

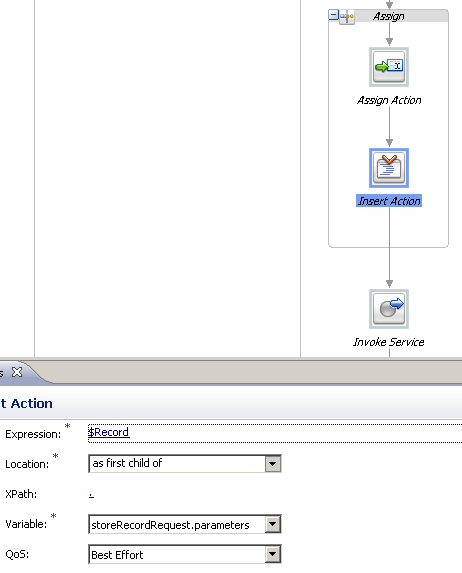

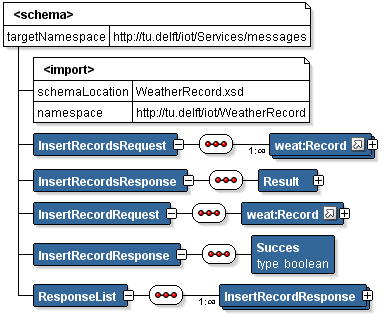

Overview of the partly automated process

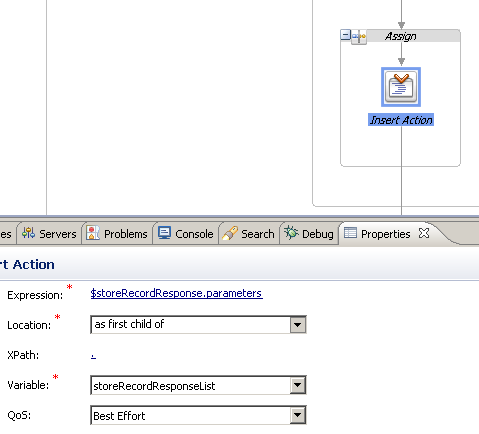

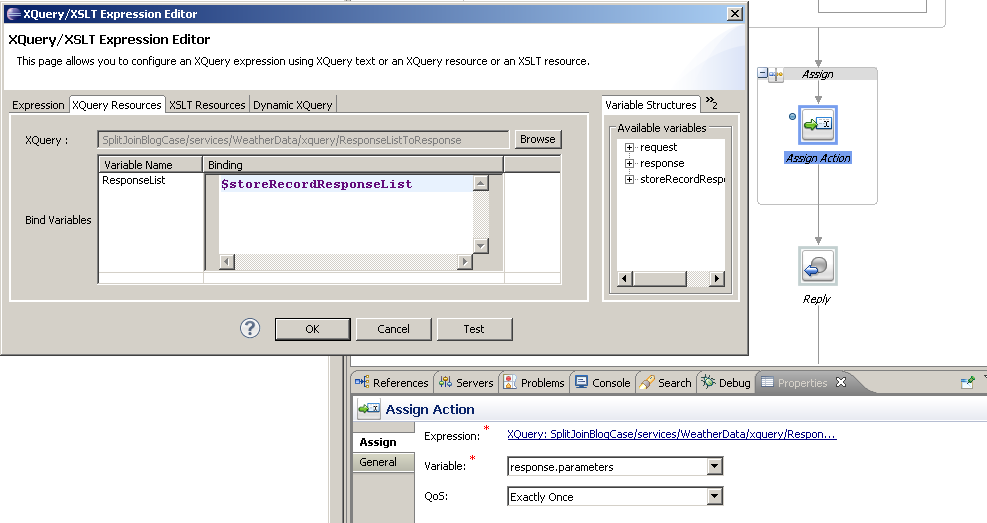

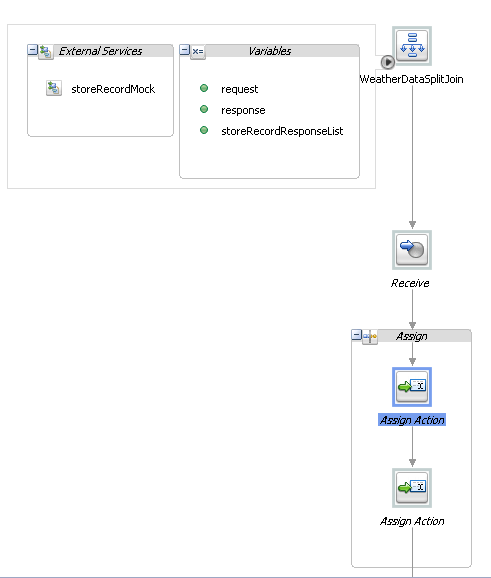

![Overview Overview]()

In the partly automated process, an image is generated from a PowerPoint slide by a customized VBA module. Office applications such as PowerPoint include Visual Basic for Applications (VBA), a programming language that lets you extend those applications. The VBA module reads data from a text file (MyProxyServiceStatisticsForPowerpoint.txt), replaces placeholders in text frames on the slide (for example CODE_Enrichment_request_elapsed-time) with data from the text file, and finally exports the slide to an image (png file). The image can then easily be inserted in the “performance analysis document” at the paragraph for the test case in question.
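The placeholder replacement itself is done in VBA (listed at the end of this article), but the idea can be sketched in a few lines of Java. This is a simplified illustration, not the actual module: the class and method names are hypothetical, and it assumes a text frame contains nothing but the placeholder, whereas the VBA module uses a whole-word find and replace.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class PlaceholderFiller {

    /** Parses lines of the form KEY;VALUE into an ordered map; malformed lines are skipped. */
    static Map<String, String> parse(String fileContent) {
        Map<String, String> result = new LinkedHashMap<String, String>();
        for (String line : fileContent.split("\\r?\\n")) {
            String[] parts = line.split(";", 2);
            if (parts.length == 2 && !parts[0].trim().isEmpty()) {
                result.put(parts[0].trim(), parts[1].trim());
            }
        }
        return result;
    }

    /** Returns the injected value if the text frame holds a known placeholder, else the text unchanged. */
    static String fill(String textFrame, Map<String, String> values) {
        String replacement = values.get(textFrame.trim());
        return replacement != null ? replacement : textFrame;
    }

    public static void main(String[] args) {
        Map<String, String> values = parse(
                "CODE_SERVICE_response-time;0secs820msecs\r\n"
                + "CODE_Enrichment_request_elapsed-time;0secs298msecs\r\n");
        System.out.println(fill("CODE_Enrichment_request_elapsed-time", values));
        System.out.println(fill("MyProxyService", values)); // not a placeholder, left as-is
    }
}
```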

![Text frame with placeholder CODE_Enrichment_request_elapsed-time Text frame with placeholder CODE_Enrichment_request_elapsed-time]()

Injected service performance metric values ==>

![Text frame with injected value for placeholder CODE_Enrichment_request_elapsed-time Text frame with injected value for placeholder CODE_Enrichment_request_elapsed-time]()

To create the text file with service monitoring details, the JMX Monitoring API was used. For details about this API see:

Java Management Extensions (JMX) Monitoring API in Oracle Service Bus (OSB)

ServiceDomainMBean

I will now explain a little bit more about the ServiceDomainMBean and how it can be used.

The public JMX APIs are modeled by a single instance of ServiceDomainMBean, which has operations to check for monitored services and retrieve data from them. A public set of POJOs provides additional objects and methods that, along with ServiceDomainMBean, provide a complete API for monitoring statistics.

There is also a sample program in the Oracle documentation (mentioned above) that demonstrates how to use the JMX Monitoring API.
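To reach the ServiceDomainMBean, a JMX connection to the Domain Runtime MBean server of WebLogic is needed. The sketch below only builds the JMX service URL, using the same protocol, JNDI root and JNDI name as the program listed at the end of this article; the class name and the host appserver01 are just examples. Actually connecting additionally requires the WebLogic client classes on the classpath, so that part is left out here.

```java
import java.net.MalformedURLException;
import javax.management.remote.JMXServiceURL;

final class DomainRuntimeUrl {

    // JNDI name of the Domain Runtime MBean server, as used in the listing in this article.
    static final String JNDI_NAME = "weblogic.management.mbeanservers.domainruntime";

    /** Builds the JMX service URL for the given admin server host and port. */
    static JMXServiceURL build(String host, int port) {
        try {
            return new JMXServiceURL("t3", host, port, "/jndi/" + JNDI_NAME);
        } catch (MalformedURLException e) {
            throw new IllegalArgumentException("Invalid host or port: " + host + ":" + port, e);
        }
    }

    public static void main(String[] args) {
        System.out.println(build("appserver01", 7001));
    }
}
```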

Most of the information that is shown in the Service Monitoring Details page can be retrieved via the ServiceDomainMBean. This does not apply to the Action Metrics (unfortunately). The POJO object ResourceType represents all types of resources that are enabled for service monitoring. The four enum constants representing types are shown in the following table:

| Service Monitoring Details tab | ResourceType enum | Description |
| --- | --- | --- |
| Service Metrics | SERVICE | A service is an inbound or outbound endpoint that is configured within Oracle Service Bus. It may have an associated WSDL, security settings, and so on. |
| Pipeline Metrics | FLOW_COMPONENT | Statistics are collected for the following two types of components that can be present in the flow definition of a proxy service: Pipeline Pair node and Route node. |
| Action Metrics | – | – |
| Operations | WEBSERVICE_OPERATION | This resource type provides statistical information pertaining to WSDL operations. Statistics are reported for each defined operation. |
| – | URI | This resource type provides statistical information pertaining to endpoint URI for a business service. Statistics are reported for each defined Endpoint URI. |
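The mapping in the table can also be written down as a small lookup, which makes explicit that the Action Metrics tab has no counterpart in the API. The enum and method names below are hypothetical and are not part of the OSB API; the resource type names are given as plain strings so that the sketch runs without the OSB jars.

```java
final class MonitoringTabs {

    /** Tabs of the Service Monitoring Details page (illustrative enum, not an OSB class). */
    enum Tab { SERVICE_METRICS, PIPELINE_METRICS, ACTION_METRICS, OPERATIONS }

    /** Returns the name of the matching ResourceType constant, or null if there is none. */
    static String resourceTypeNameFor(Tab tab) {
        switch (tab) {
            case SERVICE_METRICS:
                return "SERVICE";
            case PIPELINE_METRICS:
                return "FLOW_COMPONENT";
            case OPERATIONS:
                return "WEBSERVICE_OPERATION";
            default:
                return null; // Action Metrics cannot be retrieved via the ServiceDomainMBean
        }
    }

    static boolean retrievableViaMBean(Tab tab) {
        return resourceTypeNameFor(tab) != null;
    }

    public static void main(String[] args) {
        for (Tab tab : Tab.values()) {
            System.out.println(tab + " -> " + resourceTypeNameFor(tab));
        }
    }
}
```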

Overview of extracting performance metrics and using them by a PowerPoint VBA module

Based on the above-mentioned sample program, a customized program was created in Oracle JDeveloper to retrieve performance metrics for the MyProxyService, and more specifically for a particular list of components (“Initialization_request”, “Enrichment_request”, “RouteToADatabaseProcedure”, “Enrichment_response”, “Initialization_response”). An executable jar file, MyProxyServiceStatisticsRetriever.jar, was also created via a Deployment Profile. The program creates a text file MyProxyServiceStatistics_2016_01_14.txt with the measurements and another text file MyProxyServiceStatisticsForPowerpoint.txt with specific key-value pairs to be used by the PowerPoint VBA module.
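The key-value pairs for the PowerPoint VBA module have the form CODE_<resource name>_<statistic name>;<value>. As a minimal sketch of how such a line is put together (the class and method names are hypothetical, the format follows the full listing further on):

```java
final class PowerpointKeyValueLine {

    /** Formats a duration in msecs without spaces, for example 0secs298msecs. */
    static String formatCompact(long millis) {
        return (millis / 1000) + "secs" + (millis % 1000) + "msecs";
    }

    /** Builds one line of the key-value file for a pipeline component. */
    static String line(String resourceName, String statisticName, long averageMillis) {
        return "CODE_" + resourceName + "_" + statisticName + ";" + formatCompact(averageMillis);
    }

    public static void main(String[] args) {
        System.out.println(line("Enrichment_request", "elapsed-time", 298));
    }
}
```

Leaving out the spaces keeps each value a single word, which presumably makes it easier to handle in the text frames on the slide.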

Because the measurements had to be carried out on different WebLogic domains, a batch file MyProxyServiceStatisticsRetriever.bat was created where the domain specific connection credentials can be passed in as program arguments.
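The five program arguments are positional (HOSTNAME, PORT, USERNAME, PASSWORD, DIRECTORY). A small sketch of how they could be validated before use is shown below; the class RetrieverArguments is hypothetical and is not part of the program listed in this article.

```java
final class RetrieverArguments {

    final String hostname;
    final int port;
    final String username;
    final String password;
    final String directory;

    private RetrieverArguments(String hostname, int port, String username,
                               String password, String directory) {
        this.hostname = hostname;
        this.port = port;
        this.username = username;
        this.password = password;
        this.directory = directory;
    }

    /** Parses the positional arguments; throws IllegalArgumentException if they are unusable. */
    static RetrieverArguments parse(String[] args) {
        if (args == null || args.length != 5) {
            throw new IllegalArgumentException(
                    "Expected 5 arguments: HOSTNAME PORT USERNAME PASSWORD DIRECTORY");
        }
        int port;
        try {
            port = Integer.parseInt(args[1]);
        } catch (NumberFormatException e) {
            throw new IllegalArgumentException("PORT must be a number: " + args[1]);
        }
        return new RetrieverArguments(args[0], port, args[2], args[3], args[4]);
    }

    public static void main(String[] args) {
        RetrieverArguments parsed = parse(
                new String[] {"appserver01", "7001", "weblogic", "weblogic", "C:\\temp"});
        System.out.println(parsed.hostname + ":" + parsed.port + " -> " + parsed.directory);
    }
}
```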

Conclusion

After analyzing the measurements, it became obvious that the lack of performance was caused mainly by the call to the database procedure via RouteToADatabaseProcedure. A solution was therefore put in place that uses a caching mechanism (of pre-aggregated data).

Keep in mind that with regard to Action Metrics, statistics can’t be gathered and with regard to Pipeline Metrics only Pipeline Pair node and Route node statistics can be gathered (via the ServiceDomainMBean). Luckily, in my case, the main problem was in the Route node so the ServiceDomainMBean could be used in a meaningful way.

It proved to be a good idea to partly automate the process of doing performance measurements and presenting them, because it saved a lot of time, due to the number of measurements that had to be made.

MyProxyServiceStatisticsRetriever.bat

[code language=”html”]

D:\Oracle\Middleware\jdk160_24\bin\java.exe -classpath "MyProxyServiceStatisticsRetriever.jar;D:\Oracle\Middleware\wlserver_10.3\server\lib\weblogic.jar;D:\OSB_DEV\FMW_HOME\Oracle_OSB1\lib\sb-kernel-api.jar;D:\OSB_DEV\FMW_HOME\Oracle_OSB1\lib\sb-kernel-impl.jar;D:\OSB_DEV\FMW_HOME\Oracle_OSB1\modules\com.bea.common.configfwk_1.7.0.0.jar" myproxyservice.monitoring.MyProxyServiceStatisticsRetriever "appserver01" "7001" "weblogic" "weblogic" "C:\temp"

[/code]

MyProxyServiceStatisticsRetriever.java

[code language=”html”]

package myproxyservice.monitoring;

import com.bea.wli.config.Ref;
import com.bea.wli.monitoring.InvalidServiceRefException;
import com.bea.wli.monitoring.MonitoringException;
import com.bea.wli.monitoring.MonitoringNotEnabledException;
import com.bea.wli.monitoring.ResourceStatistic;
import com.bea.wli.monitoring.ResourceType;
import com.bea.wli.monitoring.ServiceDomainMBean;
import com.bea.wli.monitoring.ServiceResourceStatistic;
import com.bea.wli.monitoring.StatisticType;
import com.bea.wli.monitoring.StatisticValue;

import java.io.File;
import java.io.FileWriter;
import java.io.IOException;
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.net.MalformedURLException;
import java.text.SimpleDateFormat;
import java.util.Arrays;
import java.util.Date;
import java.util.HashMap;
import java.util.Hashtable;
import java.util.List;
import java.util.Map;
import java.util.Properties;

import javax.management.MBeanServerConnection;
import javax.management.MalformedObjectNameException;
import javax.management.ObjectName;
import javax.management.remote.JMXConnector;
import javax.management.remote.JMXConnectorFactory;
import javax.management.remote.JMXServiceURL;
import javax.naming.Context;

import weblogic.management.jmx.MBeanServerInvocationHandler;

public class MyProxyServiceStatisticsRetriever {

    private ServiceDomainMBean serviceDomainMbean = null;
    private String serverName = null;
    private Ref[] proxyServiceRefs;
    private Ref[] filteredProxyServiceRefs;

    /**
     * Transforms a Long value (msecs) into the time format that the Service Bus Console also uses (x secs y msecs).
     */
    private String formatToTime(Long value) {
        Long quotient = value / 1000;
        Long remainder = value % 1000;
        return Long.toString(quotient) + " secs " + Long.toString(remainder) + " msecs";
    }

    /**
     * Same as formatToTime, but without spaces (xsecsymsecs), for use in the key-value file for PowerPoint.
     */
    private String formatToTimeForPowerpoint(Long value) {
        Long quotient = value / 1000;
        Long remainder = value % 1000;
        return Long.toString(quotient) + "secs" + Long.toString(remainder) + "msecs";
    }

    /**
     * Gets an instance of ServiceDomainMBean from the weblogic server.
     */
    private void initServiceDomainMBean(String host, int port, String username,
                                        String password) throws Exception {
        InvocationHandler handler =
            new ServiceDomainMBeanInvocationHandler(host, port, username, password);
        Object proxy =
            Proxy.newProxyInstance(ServiceDomainMBean.class.getClassLoader(),
                                   new Class[] { ServiceDomainMBean.class },
                                   handler);
        serviceDomainMbean = (ServiceDomainMBean)proxy;
    }

    /**
     * Invocation handler class for ServiceDomainMBean class.
     */
    public static class ServiceDomainMBeanInvocationHandler implements InvocationHandler {

        private String jndiURL = "weblogic.management.mbeanservers.domainruntime";
        private String mbeanName = ServiceDomainMBean.NAME;
        private String type = ServiceDomainMBean.TYPE;
        private String protocol = "t3";
        private String hostname = "localhost";
        private int port = 7001;
        private String jndiRoot = "/jndi/";
        private String username = "weblogic";
        private String password = "weblogic";
        private JMXConnector conn = null;
        private Object actualMBean = null;

        public ServiceDomainMBeanInvocationHandler(String hostName, int port,
                                                   String userName,
                                                   String password) {
            this.hostname = hostName;
            this.port = port;
            this.username = userName;
            this.password = password;
        }

        /**
         * Gets JMX connection
         */
        public JMXConnector initConnection() throws IOException,
                                                    MalformedURLException {
            JMXServiceURL serviceURL =
                new JMXServiceURL(protocol, hostname, port, jndiRoot + jndiURL);
            Hashtable<String, String> h = new Hashtable<String, String>();
            if (username != null)
                h.put(Context.SECURITY_PRINCIPAL, username);
            if (password != null)
                h.put(Context.SECURITY_CREDENTIALS, password);
            h.put(JMXConnectorFactory.PROTOCOL_PROVIDER_PACKAGES,
                  "weblogic.management.remote");
            return JMXConnectorFactory.connect(serviceURL, h);
        }

        /**
         * Invokes specified method with specified params on specified
         * object.
         */
        public Object invoke(Object proxy, Method method,
                             Object[] args) throws Throwable {
            if (conn == null)
                conn = initConnection();
            if (actualMBean == null)
                actualMBean =
                    findServiceDomain(conn.getMBeanServerConnection(),
                                      mbeanName, type, null);
            return method.invoke(actualMBean, args);
        }

        /**
         * Finds the specified MBean object
         *
         * @param connection – A connection to the MBeanServer.
         * @param mbeanName – The name of the MBean instance.
         * @param mbeanType – The type of the MBean.
         * @param parent – The name of the parent Service. Can be NULL.
         * @return Object – The MBean or null if the MBean was not found.
         */
        public Object findServiceDomain(MBeanServerConnection connection,
                                        String mbeanName, String mbeanType,
                                        String parent) {
            try {
                ObjectName on = new ObjectName(ServiceDomainMBean.OBJECT_NAME);
                return (ServiceDomainMBean)MBeanServerInvocationHandler.newProxyInstance(connection,
                                                                                         on);
            } catch (MalformedObjectNameException e) {
                e.printStackTrace();
                return null;
            }
        }
    }

    public MyProxyServiceStatisticsRetriever(HashMap<String, String> props) {
        super();
        try {
            String comment = null;
            // Only these pipeline components are reported for FLOW_COMPONENT.
            String[] arrayResourceNames =
            { "Initialization_request", "Enrichment_request",
              "RouteToADatabaseProcedure",
              "Enrichment_response",
              "Initialization_response" };
            List<String> filteredResourceNames =
                Arrays.asList(arrayResourceNames);

            Properties properties = new Properties();
            properties.putAll(props);

            initServiceDomainMBean(properties.getProperty("HOSTNAME"),
                                   Integer.parseInt(properties.getProperty("PORT")),
                                   properties.getProperty("USERNAME"),
                                   properties.getProperty("PASSWORD"));

            // Save retrieved statistics.
            String fileName =
                properties.getProperty("DIRECTORY") + "\\" + "MyProxyServiceStatistics" +
                "_" +
                new SimpleDateFormat("yyyy_MM_dd").format(new Date(System.currentTimeMillis())) +
                ".txt";
            FileWriter out = new FileWriter(new File(fileName));

            String fileNameForPowerpoint =
                properties.getProperty("DIRECTORY") + "\\" +
                "MyProxyServiceStatisticsForPowerpoint" + ".txt";
            FileWriter outForPowerpoint =
                new FileWriter(new File(fileNameForPowerpoint));

            out.write("*********************************************");
            out.write("\nThis file contains statistics for a proxy service on WebLogic Server " +
                      properties.getProperty("HOSTNAME") + ":" +
                      properties.getProperty("PORT") + " and:");
            out.write("\n\tDomainName: " + serviceDomainMbean.getDomainName());
            out.write("\n\tClusterName: " +
                      serviceDomainMbean.getClusterName());
            for (int i = 0; i < (serviceDomainMbean.getServerNames()).length;
                 i++) {
                out.write("\n\tServerName: " +
                          serviceDomainMbean.getServerNames()[i]);
            }
            out.write("\n***********************************************");

            proxyServiceRefs =
                serviceDomainMbean.getMonitoredProxyServiceRefs();

            if (proxyServiceRefs != null && proxyServiceRefs.length != 0) {
                // Keep only the reference to MyProxyService.
                filteredProxyServiceRefs = new Ref[1];
                for (int i = 0; i < proxyServiceRefs.length; i++) {
                    System.out.println("ProxyService fullName: " +
                                       proxyServiceRefs[i].getFullName());
                    if (proxyServiceRefs[i].getFullName().equalsIgnoreCase("MyProxyService")) {
                        filteredProxyServiceRefs[0] = proxyServiceRefs[i];
                    }
                }
                if (filteredProxyServiceRefs != null &&
                    filteredProxyServiceRefs.length != 0) {
                    for (int i = 0; i < filteredProxyServiceRefs.length; i++) {
                        System.out.println("Filtered proxyService fullName: " +
                                           filteredProxyServiceRefs[i].getFullName());
                    }
                }

                System.out.println("Started…");
                for (ResourceType resourceType : ResourceType.values()) {
                    // Only process the following resource types: SERVICE,FLOW_COMPONENT,WEBSERVICE_OPERATION
                    if (resourceType.name().equalsIgnoreCase("URI")) {
                        continue;
                    }
                    HashMap<Ref, ServiceResourceStatistic> proxyServiceResourceStatisticMap =
                        serviceDomainMbean.getProxyServiceStatistics(filteredProxyServiceRefs,
                                                                     resourceType.value(),
                                                                     null);
                    for (Map.Entry<Ref, ServiceResourceStatistic> mapEntry :
                         proxyServiceResourceStatisticMap.entrySet()) {
                        System.out.println("======= Printing statistics for service: " +
                                           mapEntry.getKey().getFullName() +
                                           " and resourceType: " +
                                           resourceType.toString() +
                                           " =======");
                        if (resourceType.toString().equalsIgnoreCase("SERVICE")) {
                            comment =
                                "(Comparable to Service Bus Console | Service Monitoring Details | Service Metrics)";
                        } else if (resourceType.toString().equalsIgnoreCase("FLOW_COMPONENT")) {
                            comment =
                                "(Comparable to Service Bus Console | Service Monitoring Details | Pipeline Metrics )";
                        } else if (resourceType.toString().equalsIgnoreCase("WEBSERVICE_OPERATION")) {
                            comment =
                                "(Comparable to Service Bus Console | Service Monitoring Details | Operations)";
                        }
                        out.write("\n\n======= Printing statistics for service: " +
                                  mapEntry.getKey().getFullName() +
                                  " and resourceType: " +
                                  resourceType.toString() + " " + comment +
                                  " =======");
                        ServiceResourceStatistic serviceStats =
                            mapEntry.getValue();
                        out.write("\nStatistic collection time is – " +
                                  new Date(serviceStats.getCollectionTimestamp()));
                        try {
                            ResourceStatistic[] resStatsArray =
                                serviceStats.getAllResourceStatistics();
                            for (ResourceStatistic resStats : resStatsArray) {
                                if (resourceType.toString().equalsIgnoreCase("FLOW_COMPONENT") &&
                                    !filteredResourceNames.contains(resStats.getName())) {
                                    continue;
                                }
                                if (resourceType.toString().equalsIgnoreCase("WEBSERVICE_OPERATION") &&
                                    !resStats.getName().equalsIgnoreCase("MyGetDataOperation")) {
                                    continue;
                                }
                                // Print resource information
                                out.write("\nResource name: " +
                                          resStats.getName());
                                out.write("\n\tResource type: " +
                                          resStats.getResourceType().toString());
                                // Now get and print statistics for this resource
                                StatisticValue[] statValues =
                                    resStats.getStatistics();
                                for (StatisticValue value : statValues) {
                                    if (resourceType.toString().equalsIgnoreCase("SERVICE") &&
                                        !value.getName().equalsIgnoreCase("response-time")) {
                                        continue;
                                    }
                                    if (resourceType.toString().equalsIgnoreCase("FLOW_COMPONENT") &&
                                        !value.getType().toString().equalsIgnoreCase("INTERVAL")) {
                                        continue;
                                    }
                                    if (resourceType.toString().equalsIgnoreCase("WEBSERVICE_OPERATION") &&
                                        !value.getType().toString().equalsIgnoreCase("INTERVAL")) {
                                        continue;
                                    }
                                    out.write("\n\t\tStatistic Name – " +
                                              value.getName());
                                    out.write("\n\t\tStatistic Type – " +
                                              value.getType());
                                    // Determine statistics type
                                    if (value.getType() ==
                                        StatisticType.INTERVAL) {
                                        StatisticValue.IntervalStatistic is =
                                            (StatisticValue.IntervalStatistic)value;
                                        // Print interval statistics values
                                        out.write("\n\t\t\tMessage Count: " +
                                                  is.getCount());
                                        out.write("\n\t\t\tMin Response Time: " +
                                                  formatToTime(is.getMin()));
                                        out.write("\n\t\t\tMax Response Time: " +
                                                  formatToTime(is.getMax()));
                                        /* out.write("\n\t\t\tSum Value – " +
                                                     is.getSum()); */
                                        out.write("\n\t\t\tOverall Avg. Response Time: " +
                                                  formatToTime(is.getAverage()));
                                        // Write the key-value pairs for the PowerPoint VBA module.
                                        if (resourceType.toString().equalsIgnoreCase("SERVICE")) {
                                            outForPowerpoint.write("CODE_SERVICE_" +
                                                                   value.getName() +
                                                                   ";" +
                                                                   formatToTimeForPowerpoint(is.getAverage()));
                                        }
                                        if (resourceType.toString().equalsIgnoreCase("FLOW_COMPONENT")) {
                                            outForPowerpoint.write("\r\nCODE_" +
                                                                   resStats.getName() +
                                                                   "_" +
                                                                   value.getName() +
                                                                   ";" +
                                                                   formatToTimeForPowerpoint(is.getAverage()));
                                        }
                                    } else if (value.getType() ==
                                               StatisticType.COUNT) {
                                        StatisticValue.CountStatistic cs =
                                            (StatisticValue.CountStatistic)value;
                                        // Print count statistics value
                                        out.write("\n\t\t\t\tCount Value – " +
                                                  cs.getCount());
                                    } else if (value.getType() ==
                                               StatisticType.STATUS) {
                                        StatisticValue.StatusStatistic ss =
                                            (StatisticValue.StatusStatistic)value;
                                        // Print status statistics values
                                        out.write("\n\t\t\t\t Initial Status – " +
                                                  ss.getInitialStatus());
                                        out.write("\n\t\t\t\t Current Status – " +
                                                  ss.getCurrentStatus());
                                    }
                                }
                            }
                            out.write("\n=========================================");
                        } catch (MonitoringNotEnabledException mnee) {
                            // Statistics not available
                            out.write("\nWARNING: Monitoring is not enabled for this service… Do something…");
                            out.write("\n=====================================");
                        } catch (InvalidServiceRefException isre) {
                            // Invalid service
                            out.write("\nERROR: Invalid Ref. Maybe this service was deleted. Do something…");
                            out.write("\n======================================");
                        } catch (MonitoringException me) {
                            // Statistics not available
                            out.write("\nERROR: Failed to get statistics for this service…Details: " +
                                      me.getMessage());
                            me.printStackTrace();
                            out.write("\n======================================");
                        }
                    }
                }
                System.out.println("Finished");
            }

            // Flush and close file.
            out.flush();
            out.close();
            // Flush and close file.
            outForPowerpoint.flush();
            outForPowerpoint.close();
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    public static void main(String[] args) {
        try {
            // All 5 arguments are required, because args[0] to args[4] are read below.
            if (args.length < 5) {
                System.out.println("Use the following arguments: HOSTNAME, PORT, USERNAME, PASSWORD, DIRECTORY. For example: appserver01 7001 weblogic weblogic C:\\temp");
            } else {
                HashMap<String, String> map = new HashMap<String, String>();
                map.put("HOSTNAME", args[0]);
                map.put("PORT", args[1]);
                map.put("USERNAME", args[2]);
                map.put("PASSWORD", args[3]);
                map.put("DIRECTORY", args[4]);
                MyProxyServiceStatisticsRetriever myProxyServiceStatisticsRetriever =
                    new MyProxyServiceStatisticsRetriever(map);
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}

[/code]

The VBA module

[code language=”html”]

Sub ReadFromFile()
    Dim FileNum As Integer
    Dim FileName As String
    Dim InputBuffer As String
    Dim oSld As Slide
    Dim oShp As Shape
    Dim oTxtRng As TextRange
    Dim oTmpRng As TextRange
    Dim strWhatReplace As String, strReplaceText As String
    Dim property As Variant
    Dim element As Integer
    Dim key As String
    Dim value As String
    Dim sImagePath As String
    Dim sImageName As String
    Dim sPrefix As String
    Dim lPixwidth As Long ' size in pixels of exported image
    Dim lPixheight As Long

    ' File with key;value pairs, as written by MyProxyServiceStatisticsRetriever
    FileName = "C:\temp\MyProxyServiceStatisticsForPowerpoint.txt"
    FileNum = FreeFile

    On Error GoTo Err_ImageSave

    sImagePath = "C:\temp"
    sPrefix = "MyproxyservciceStatistics"
    lPixwidth = 1024
    ' Set height proportional to slide height
    lPixheight = (lPixwidth * ActivePresentation.PageSetup.SlideHeight) / ActivePresentation.PageSetup.SlideWidth

    ' A little error checking
    If Dir$(FileName) <> "" Then ' the file exists, it's safe to continue
        Open FileName For Input As FileNum
        While Not EOF(FileNum)
            Input #FileNum, InputBuffer
            ' Split the line into key (element 0) and value (element 1)
            'MsgBox InputBuffer
            property = Split(InputBuffer, ";")
            For element = 0 To UBound(property)
                If element = 0 Then
                    key = property(element)
                End If
                If element = 1 Then
                    value = property(element)
                End If
            Next element
            ' MsgBox key
            ' MsgBox value

            ' text to find
            strWhatReplace = key
            ' replacement text
            strReplaceText = value
            ' MsgBox strWhatReplace

            ' loop over each slide
            For Each oSld In ActivePresentation.Slides
                ' loop over each shape and its text range
                For Each oShp In oSld.Shapes
                    If oShp.Type = msoTextBox Then
                        ' replace in TextFrame
                        Set oTxtRng = oShp.TextFrame.TextRange
                        Set oTmpRng = oTxtRng.Replace( _
                            FindWhat:=strWhatReplace, _
                            Replacewhat:=strReplaceText, _
                            WholeWords:=True)
                        Do While Not oTmpRng Is Nothing
                            Set oTxtRng = oTxtRng.Characters _
                                (oTmpRng.Start + oTmpRng.Length, oTxtRng.Length)
                            Set oTmpRng = oTxtRng.Replace( _
                                FindWhat:=strWhatReplace, _
                                Replacewhat:=strReplaceText, _
                                WholeWords:=True)
                        Loop
                        oShp.TextFrame.WordWrap = False
                    End If
                Next oShp
                ' export the slide as a png image
                sImageName = sPrefix & "-" & oSld.SlideIndex & ".png"
                oSld.Export sImagePath & "\" & sImageName, "PNG", lPixwidth, lPixheight
            Next oSld
        Wend
        Close FileNum
        MsgBox "Done"
    Else
        ' the file isn't there. Don't try to open it.
    End If

Err_ImageSave:
    If Err <> 0 Then
        MsgBox Err.Description
    End If
End Sub

[/code]

MyProxyServiceStatistics_2016_01_14.txt

[code language=”html”]

*********************************************

This file contains statistics for a proxy service on WebLogic Server appserver01:7001and:

DomainName: DM_OSB_DEV1

ClusterName: CL_OSB_01

ServerName: MS_OSB_01

ServerName: MS_OSB_02

***********************************************

======= Printing statistics for service: MyProxyService and resourceType: SERVICE (Comparable to Service Bus Console | Service Monitoring Details | Service Metrics) =======

Statistic collection time is – Thu Jan 14 11:26:00 CET 2016

Resource name: Transport

Resource type: SERVICE

Statistic Name – response-time

Statistic Type – INTERVAL

Message Count: 5

Min Response Time: 0 secs 552 msecs

Max Response Time: 1 secs 530 msecs

Overall Avg. Response Time: 0 secs 820 msecs

=========================================

======= Printing statistics for service: MyProxyService and resourceType: FLOW_COMPONENT (Comparable to Service Bus Console | Service Monitoring Details | Pipeline Metrics ) =======

Statistic collection time is – Thu Jan 14 11:26:00 CET 2016

Resource name: MyGetDataOperation

Resource type: FLOW_COMPONENT

Statistic Name – Validation_request

Statistic Type – INTERVAL

Message Count: 5

Min Response Time: 0 secs 0 msecs

Max Response Time: 0 secs 0 msecs

Overall Avg. Response Time: 0 secs 0 msecs

Statistic Name – Validation_response

Statistic Type – INTERVAL

Message Count: 5

Min Response Time: 0 secs 0 msecs

Max Response Time: 0 secs 0 msecs

Overall Avg. Response Time: 0 secs 0 msecs

Statistic Name – Authorization_request

Statistic Type – INTERVAL

Message Count: 5

Min Response Time: 0 secs 42 msecs

Max Response Time: 0 secs 62 msecs

Overall Avg. Response Time: 0 secs 52 msecs

Statistic Name – Authorization_response

Statistic Type – INTERVAL

Message Count: 5

Min Response Time: 0 secs 0 msecs

Max Response Time: 0 secs 0 msecs

Overall Avg. Response Time: 0 secs 0 msecs

Resource name: Initialization_request

Resource type: FLOW_COMPONENT

Statistic Name – elapsed-time

Statistic Type – INTERVAL

Message Count: 5

Min Response Time: 0 secs 0 msecs

Max Response Time: 0 secs 1 msecs

Overall Avg. Response Time: 0 secs 0 msecs

Resource name: Enrichment_request

Resource type: FLOW_COMPONENT

Statistic Name – elapsed-time

Statistic Type – INTERVAL

Message Count: 5

Min Response Time: 0 secs 196 msecs

Max Response Time: 0 secs 553 msecs

Overall Avg. Response Time: 0 secs 298 msecs

Resource name: Initialization_response

Resource type: FLOW_COMPONENT

Statistic Name – elapsed-time

Statistic Type – INTERVAL

Message Count: 5

Min Response Time: 0 secs 1 msecs

Max Response Time: 0 secs 3 msecs

Overall Avg. Response Time: 0 secs 2 msecs

Resource name: RouteToADatabaseProcedure

Resource type: FLOW_COMPONENT

Statistic Name – elapsed-time

Statistic Type – INTERVAL

Message Count: 5

Min Response Time: 0 secs 116 msecs

Max Response Time: 0 secs 174 msecs

Overall Avg. Response Time: 0 secs 146 msecs

Resource name: Enrichment_response

Resource type: FLOW_COMPONENT

Statistic Name – elapsed-time

Statistic Type – INTERVAL

Message Count: 5

Min Response Time: 0 secs 119 msecs

Max Response Time: 0 secs 411 msecs

Overall Avg. Response Time: 0 secs 230 msecs

=========================================

======= Printing statistics for service: MyProxyService and resourceType: WEBSERVICE_OPERATION (Comparable to Service Bus Console | Service Monitoring Details | Operations) =======

Statistic collection time is – Thu Jan 14 11:26:00 CET 2016

Resource name: MyGetDataOperation

Resource type: WEBSERVICE_OPERATION

Statistic Name – elapsed-time

Statistic Type – INTERVAL

Message Count: 5

Min Response Time: 0 secs 550 msecs

Max Response Time: 1 secs 95 msecs

Overall Avg. Response Time: 0 secs 731 msecs

=========================================

[/code]

MyProxyServiceStatisticsForPowerpoint.txt

[code language=”html”]

CODE_SERVICE_response-time;0secs820msecs

CODE_Validation_request;0secs0msecs

CODE_Validation_response;0secs0msecs

CODE_Authorization_request;0secs52msecs

CODE_Authorization_response;0secs0msecs

CODE_Initialization_request_elapsed-time;0secs0msecs

CODE_Enrichment_request_elapsed-time;0secs298msecs

CODE_Initialization_response_elapsed-time;0secs2msecs

CODE_RouteToADatabaseProcedure_elapsed-time;0secs146msecs

CODE_Enrichment_response_elapsed-time;0secs230msecs

[/code]

The post Doing performance measurements of an OSB Proxy Service by programmatically extracting performance metrics via the ServiceDomainMBean and presenting them as an image via a PowerPoint VBA module appeared first on AMIS Oracle and Java Blog.

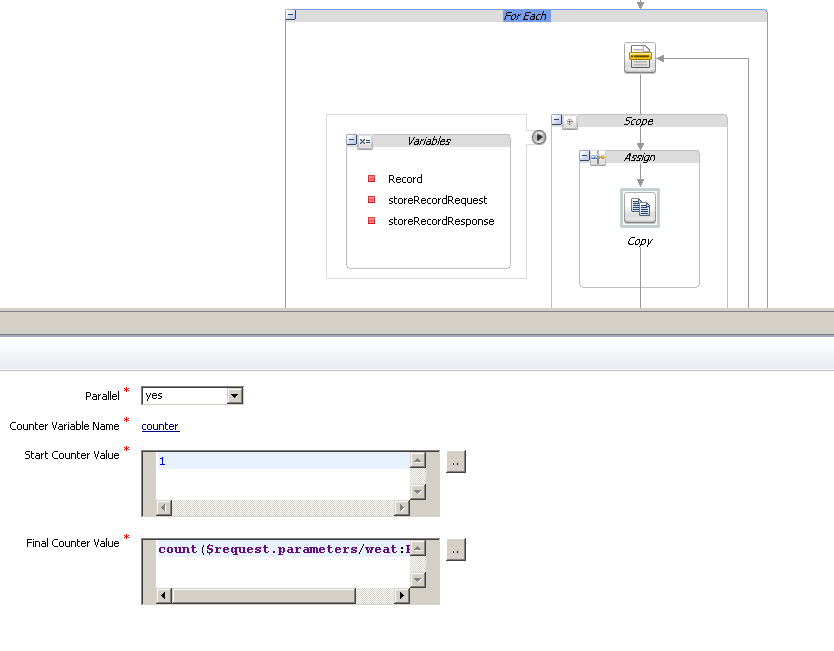
